Building a Simple Web Scraper: Using BeautifulSoup to Scrape Data from Websites

Introduction

In today's digital age, data is the new gold. Companies, researchers, and developers rely on vast amounts of online data for decision-making, trend analysis, and business intelligence. But what if you could extract the data you need directly from websites, without manually copying and pasting? This is where web scraping comes into play.

In this article, we will explore how you can build a simple web scraper using Python and BeautifulSoup, delve into some real-world use cases, and understand the ethical considerations involved in web scraping.

What is Web Scraping?

Web scraping is the process of automatically extracting information from websites. Instead of manually browsing and copying data, a web scraper can fetch, parse, and store data efficiently. Web scraping is commonly used for:

- Price comparison – Aggregating product prices from multiple e-commerce websites.

- News aggregation – Gathering the latest headlines from different news portals.

- SEO analysis – Extracting keywords and metadata for content optimization.

- Job postings – Collecting job listings from various recruitment sites.

- Research and academic purposes – Analyzing trends and extracting insights from open datasets.

Setting Up the Environment

To get started with web scraping in Python, you will need:

- Python installed on your system.

- The following Python libraries:

  - requests for fetching webpage content.

  - BeautifulSoup for parsing HTML data.

  - pandas (optional) for storing and analyzing extracted data.

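You can install the necessary libraries by running the following command in your terminal (note that BeautifulSoup is published under the package name beautifulsoup4):

pip install requests beautifulsoup4 pandas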

Writing Your First Web Scraper

Step 1: Import Required Libraries
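A typical set of imports for this walkthrough is shown below. It assumes the three packages from the install step are available; pandas is only needed if you save results as in Step 5.

```python
import requests                  # HTTP client used to download pages
from bs4 import BeautifulSoup    # HTML parser (installed as "beautifulsoup4")
import pandas as pd              # optional: only needed to save results in Step 5
```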

Step 2: Send a Request to the Website
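A minimal sketch of the request step, using requests.get with a timeout and raise_for_status() so that HTTP errors are not silently parsed as content. The URL, the User-Agent string, and the helper name fetch_html are hypothetical placeholders, not part of any library.

```python
import requests

# Hypothetical target URL; replace it with a page you are permitted to scrape.
URL = "https://example.com/news"

# Identifying your scraper with a User-Agent header is a common courtesy;
# this string is a placeholder.
HEADERS = {"User-Agent": "simple-scraper/0.1 (contact: you@example.com)"}


def fetch_html(url, timeout=10):
    """Download a page and return its HTML as text.

    Raises requests.HTTPError for 4xx/5xx responses and
    requests.Timeout if the server does not respond in time.
    """
    response = requests.get(url, headers=HEADERS, timeout=timeout)
    response.raise_for_status()  # fail loudly instead of parsing an error page
    return response.text


# Usage (performs a real network request):
# html = fetch_html(URL)
```

Setting a timeout matters because requests otherwise waits indefinitely for a server that never answers.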

Step 3: Parse the HTML Content
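Once you have the HTML as a string, BeautifulSoup turns it into a navigable tree. The sketch below parses a small hard-coded page (a hypothetical stand-in for downloaded HTML) with Python's built-in "html.parser"; "lxml" is a faster alternative if that package is installed.

```python
from bs4 import BeautifulSoup

# A small hard-coded page standing in for HTML downloaded from a website.
html = """
<html>
  <head><title>Example News</title></head>
  <body>
    <h2 class="title">First headline</h2>
    <h2 class="title">Second headline</h2>
  </body>
</html>
"""

# "html.parser" ships with Python, so no extra install is needed.
soup = BeautifulSoup(html, "html.parser")

print(soup.title.string)                 # Example News
print(soup.find("h2").get_text())        # First headline (first match only)
print(len(soup.find_all("h2")))          # 2 (every match)
```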

Step 4: Extract Specific Data

For example, to extract all article titles from a news website:

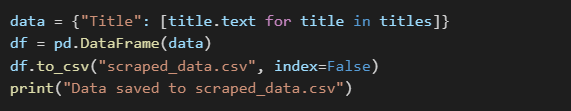
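As a hedged sketch of this step, the code below assumes each headline is an h2 element with class "title" wrapping a link. That markup is hypothetical: real sites use their own tags and class names, so inspect the page with your browser's developer tools before choosing selectors.

```python
from bs4 import BeautifulSoup

# Hypothetical news-page markup; the class names are assumptions.
html = """
<div class="articles">
  <h2 class="title"><a href="/story-1">First headline</a></h2>
  <h2 class="title"><a href="/story-2">Second headline</a></h2>
  <h2 class="subtitle">Not a headline</h2>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

articles = []
# class_ (with a trailing underscore) filters by CSS class,
# because "class" is a reserved word in Python.
for heading in soup.find_all("h2", class_="title"):
    link = heading.find("a")
    articles.append({
        # get_text(strip=True) returns the visible text without surrounding whitespace
        "title": heading.get_text(strip=True),
        # .get() returns None instead of raising if the attribute is missing
        "url": link.get("href") if link else None,
    })

for article in articles:
    print(article["title"], "->", article["url"])
# First headline -> /story-1
# Second headline -> /story-2
```

The same selection can also be written as a CSS selector, soup.select("h2.title a"), which returns the link tags directly.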

Step 5: Store the Data

You can store the extracted data in a CSV file using Pandas:
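A minimal sketch, assuming the scraped results are a list of dictionaries like the one built in the previous step. The records and the file name articles.csv are hypothetical.

```python
import pandas as pd

# Hypothetical records: one dict per scraped article.
articles = [
    {"title": "First headline", "url": "/story-1"},
    {"title": "Second headline", "url": "/story-2"},
]

# Each dict becomes a row; the dict keys become the column names.
df = pd.DataFrame(articles)

# index=False stops pandas from writing its 0, 1, 2... row index as an extra column.
df.to_csv("articles.csv", index=False, encoding="utf-8")

# Reading the file back is a quick sanity check.
print(pd.read_csv("articles.csv").head())
```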

Case Study: Web Scraping in Action

A data-driven startup wanted to analyze competitor pricing trends in real time. They built a web scraper to fetch prices from different e-commerce sites every day. By analyzing the data, they could adjust their pricing dynamically, resulting in a 15% increase in sales within a few months.

Ethical Considerations and Legal Aspects

While web scraping is a powerful tool, not all websites allow it. Here are some key points to consider:

- Check the website's robots.txt file to see if scraping is permitted.

- Respect the site's terms of service and avoid scraping sensitive or private information.

- Use API endpoints if available, as they are often more efficient and legally safer.

- Avoid overloading a server by making too many requests in a short time.
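The robots.txt and rate-limiting points can be partly automated with Python's standard-library urllib.robotparser. The sketch below parses a hypothetical robots.txt from a string so it runs offline; against a live site you would call set_url() and read() instead. The user-agent name, the helper functions, and the 1-second fallback delay are assumptions for illustration.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "simple-scraper"  # hypothetical user-agent name

# Hypothetical robots.txt content. For a live site, use instead:
#   rp.set_url("https://example.com/robots.txt"); rp.read()
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())


def is_allowed(url):
    """True if robots.txt permits USER_AGENT to fetch this URL."""
    return rp.can_fetch(USER_AGENT, url)


def delay_seconds(default=1.0):
    """The site's Crawl-delay for USER_AGENT if it sets one, else a default pause."""
    delay = rp.crawl_delay(USER_AGENT)
    return delay if delay is not None else default


def polite_fetch_all(urls, fetch):
    """Call fetch(url) for each permitted URL, pausing between requests."""
    results = {}
    for url in urls:
        if not is_allowed(url):
            continue  # skip paths that robots.txt disallows
        if results:
            time.sleep(delay_seconds())  # wait before every request after the first
        results[url] = fetch(url)
    return results


print(is_allowed("https://example.com/news/"))       # True
print(is_allowed("https://example.com/private/x"))   # False
print(delay_seconds())                                # 2
```

Here fetch can be any function that downloads a page, for example one built on requests.get. Passing it in keeps the politeness logic separate from the HTTP code.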

Future of Web Scraping

With advancements in AI and Natural Language Processing (NLP), web scraping is evolving. Frameworks such as Scrapy (for large-scale crawling) and Selenium (for pages rendered with JavaScript) enable more sophisticated data extraction than a basic requests-and-BeautifulSoup script, while machine learning models make it easier to analyze and interpret the scraped data.

Conclusion

Web scraping is an essential skill for anyone working with data. Whether you want to analyze trends, extract insights, or automate repetitive tasks, Python, requests, and BeautifulSoup provide an easy and efficient way to collect and parse web data. However, always ensure that your scraping practices are ethical and comply with legal guidelines.

Want to Take It Further?

If you're interested in advanced web scraping, consider:

- Using Scrapy for large-scale projects.

- Implementing Selenium for scraping dynamic JavaScript-based content.

- Applying AI-powered sentiment analysis on scraped text data.

Happy scraping! 🚀
